This article was written for the "Issues" section of Omalius magazine #28 of March 2023.

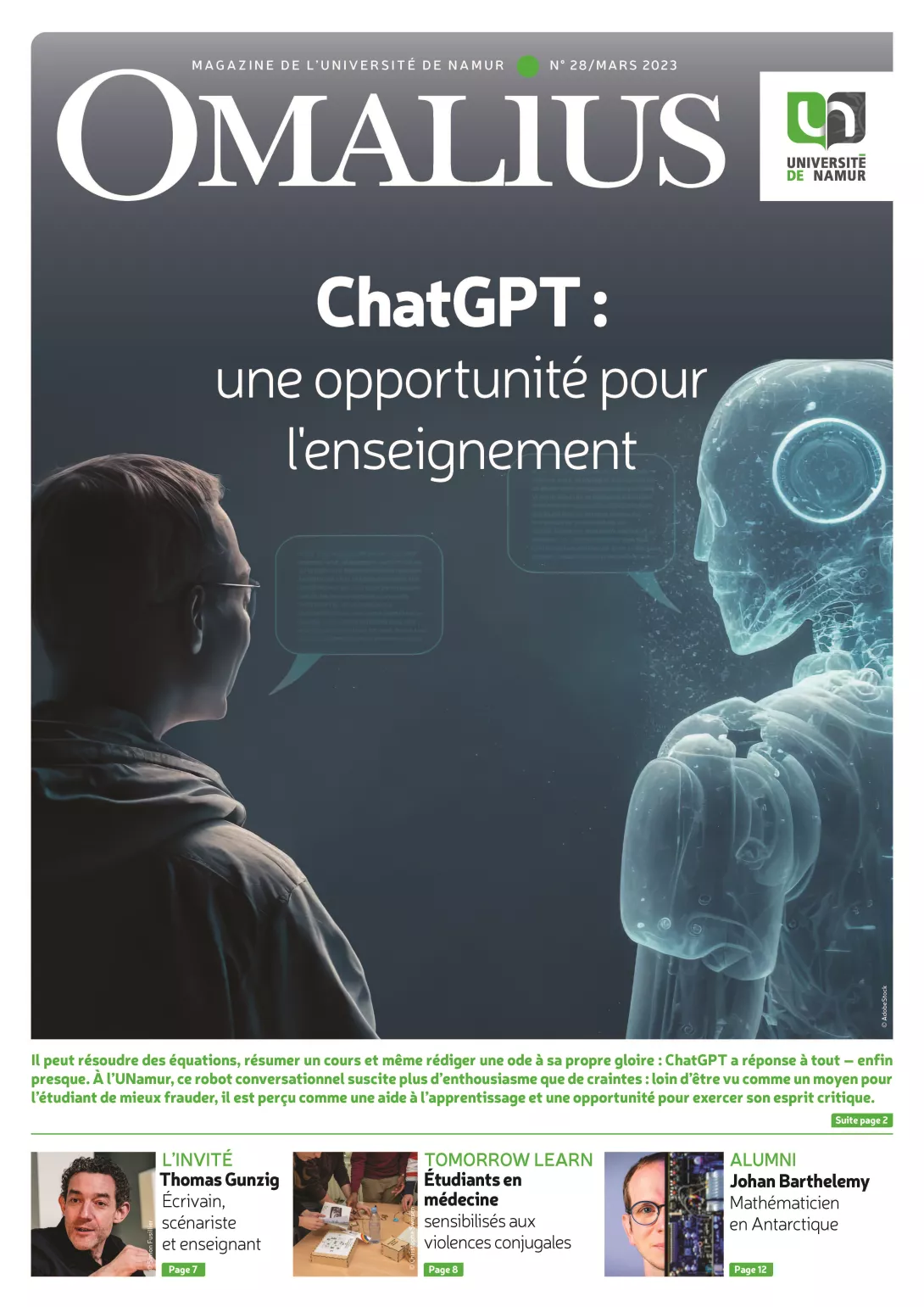

It's true that this chatbot, which seems to think and write in real time like a friend you would chat with on Messenger or WhatsApp, is as intuitive as it is surprising. Completely free and accessible with a simple email address, it can do calculations, produce a recipe, write articles and compose poems: "Oh ChatGPT, source of infinite knowledge, You are our guide in the digital world, Providing us with your endless knowledge, You are the source of our logical intelligence". It is just as capable of explaining, with unfailing aplomb, that cows are oviparous, or of citing bibliographic references that one would swear are authentic but that are in fact inaccurate. The tool is built on plausibility, not truth. "ChatGPT works on the basis of a probabilistic equation," explains Bruno Dumas, professor at the Faculty of Computer Science at UNamur and co-chair of the NaDI Research Institute. "It knits together the most likely sequence of words based on the question you have given it. This sequence of words forms sentences that are meaningful and interesting because ChatGPT has been trained on a lot of data, some of which is known: all of Wikipedia in English, the entire social network Reddit, two large databases of books (the equivalent of a huge library), and the rest of the Internet, including Twitter."
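To make the idea of "the most likely sequence of words" more concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT is built: ChatGPT relies on a large neural network trained on enormous amounts of text, whereas this toy uses a hand-written table of word-to-word probabilities, and the words and numbers in it are invented purely for illustration. It only shows the principle Bruno Dumas describes: at each step, pick a plausible next word, with no check on whether the resulting sentence is true.

```python
import random

# Hypothetical, hand-made probabilities (invented for this illustration):
# for each word, the chance of each possible next word.
# A real language model learns such probabilities from huge amounts of text.
NEXT_WORD = {
    "<start>": {"cows": 0.6, "hens": 0.4},
    "cows": {"are": 1.0},
    "hens": {"are": 1.0},
    "are": {"mammals": 0.5, "oviparous": 0.3, "herbivores": 0.2},
    "mammals": {"<end>": 1.0},
    "oviparous": {"<end>": 1.0},
    "herbivores": {"<end>": 1.0},
}


def most_likely_sentence():
    """Always follow the single most probable next word (greedy choice)."""
    word, words = "<start>", []
    while True:
        options = NEXT_WORD[word]
        word = max(options, key=options.get)
        if word == "<end>":
            return " ".join(words)
        words.append(word)


def sampled_sentence(rng):
    """Draw each next word at random, weighted by its probability."""
    word, words = "<start>", []
    while True:
        options = NEXT_WORD[word]
        word = rng.choices(list(options), weights=list(options.values()))[0]
        if word == "<end>":
            return " ".join(words)
        words.append(word)


print(most_likely_sentence())  # cows are mammals
rng = random.Random()
for _ in range(3):
    print(sampled_sentence(rng))  # varies from run to run
```

Run a few times, the sampled version will sometimes produce "cows are oviparous": a perfectly fluent sentence that is simply false. Each output is just one path through the table, and a different path yields a different, equally confident answer.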

Personal assistant

In a world where our relationship with the truth is already badly strained, fears of plagiarism, cheating, fabricated evidence and sources, and the standardisation of thought are legion. But since fear does not remove the danger, teachers at UNamur quickly considered how best to integrate the conversational robot into their courses. "There is always enthusiasm for a new tool, but you have to be critical," says Guillaume Mele, a researcher and assistant in the university's Education and Technology Department and a member of the IRDENa research institute. "We went through an analysis phase to identify the possibilities offered by ChatGPT, but also its limits. It's a great tool, but it should remain a tool, not the heart of a teaching system."

"Personally, I am a great believer in the idea of companionship in teaching, in the one-to-one relationship: from this perspective, ChatGPT can be seen as an opportunity for students to have a personal digital assistant at home," suggests Michaël Lobet, a professor in the Department of Physics at UNamur and an FNRS-qualified researcher at the NISM Research Institute. "Of course, we are not there yet, but if our students manage to use the tool correctly, even if only to do some of the groundwork on the material, that will be very good. We can envisage different teaching scenarios: they could use it for a course summary, mind mapping, redefining certain concepts... That said, I remain sceptical and cautious because we are in the initial phase. I submitted three physics problems to ChatGPT and it failed on two of them..." However, the aim is not to "trap" ChatGPT: that would mean treating it as a rival and forgetting that it is only a machine, one that promises to improve. "If I use a hammer, I don't compete with the hammer," says Michaël Lobet. But for driving a nail, there is nothing like it.

Critical thinking

Of course, ChatGPT will bring change, as will its competitors, starting with Bard, Google's conversational tool. But just as education and research survived Wikipedia, they could survive these intelligent chatbots. "With Covid, we had to introduce Teams as a matter of urgency, which took everyone out of their comfort zone and changed the way we teach, and not only for the worse. It also prompted more reflection on teaching and helped teachers' digital skills evolve, resulting in some impressive teaching setups. There were fears, then a period of getting to grips with the tool, until everyone used it (or not) according to their needs. This is probably what will happen with ChatGPT," says Guillaume Mele.

Although many professors are already interested in it, ChatGPT remains unknown to many students. Marie Lobet, an assistant in the Department of Education and Technology (DET) at UNamur, has carried out an initial study showing that only a third of them have heard of it. So this is a good time for introductions. "ChatGPT can quickly work against students if they don't use it critically," says Olivier Sartenaer, philosopher of science at UNamur. "This is why I suggest that they use it and cite it as a source, in the same way as any conventional source, putting the results they obtain in an appendix. This is a way to use the tool honestly, showing where it is right and where it is wrong. As in any investigative work, it must obviously be treated as one source among others, and as an unreliable one, since it works on the principle of probability and not of truth..." For its part, ChatGPT does not cite sources for any of the information it provides, which could pose intellectual property problems. Above all, its output should be seen as unrefined raw material: a clay that needs to be shaped and reshaped if we want to get closer to what is right, true, or even beautiful: "Oh ChatGPT, You are a technological jewel, A marvel that makes our lives easier, You save us precious time, And guide us towards success."

Élise Degrave, professor at the Faculty of Law of UNamur and member of the NaDI Institute, also believes that students need to be introduced to this tool and that it would even be "dangerous to prohibit it": "We have to get away from the stereotypes according to which teachers are necessarily old and out of touch, and students necessarily cheats... Our job is to teach students to evolve with the tools that exist, because they are the ones who will later make jobs evolve. This is all the more important as many students, even if they are very comfortable with social networks, have no real culture of digital tools: many do not know what a filter bubble is (editor's note: the isolation that results when algorithms personalise the content and search results each user sees) or that on Tinder, the algorithm matches good-looking people with good-looking people." This knowledge is all the more necessary as artificial intelligence is likely to play an ever larger role in our lives. So we might as well make it our ally. For, as ChatGPT writes about itself in the final stanza: "We address this ode to you with love, You, our dear virtual friend, You are a part of our daily lives, And we could not replace you."

ChatGPT: a prescriptive tool?

ChatGPT has an answer for everything and never gets angry. It is impossible to put it out of its depth or to plunge it into the abyss of doubt. "ChatGPT's answers have a stereotyped quality. And it is a black box: we know that the tool has 'eaten' the whole of Wikipedia, but in English, which is not neutral," comments Olivier Sartenaer. The question at the heart of the social sciences, "where am I speaking from?", is of no concern to the conversational robot. "Usually, we can try to understand why a source tells us one thing or another, but that cannot be done here because the statement has no author. It is therefore impossible to put the statement in context." The most we know, following an investigation by Time, is that OpenAI worked with Kenyan workers, through the company Sama, to filter out the racist, sexist and hateful content that ChatGPT could have fed on... "These workers were paid two dollars an hour to confront the dregs of humanity. This obviously raises questions," comments Bruno Dumas.

Neither empathy nor transgression

"For example, if you ask it how to build bombs, it will tell you that if you have a problem, you can consult a health professional... But who defines these standards? From a legal point of view, it's interesting," says Élise Degrave. In her view, ChatGPT is most notable for its inability to transgress these standards. "In law, the notion of good morals has been defined by the same article of the Civil Code since 1830, except that back then, what was contrary to good morals was cohabitation outside marriage, and now, to put it crudely, it is selling one's eggs on the internet... Society changes, but ChatGPT is incapable of interpreting and changing norms. It is also incapable of reasoning about force majeure. In law, for example, speeding is prohibited, except to rescue a person in danger." Utterly rigid, ChatGPT knows neither exceptions nor empathy. In this sense, according to Élise Degrave, it is "a magnificent plea for the role of the human being". Incapable of the worst, ChatGPT is also incapable of the best.

Interview with Laurent Schumacher, Vice-Rector for Education

At the end of January, UNamur organised a PUNCh (Pédagogie Universitaire Namuroise en Changement) session dedicated to ChatGPT, which was a real success, with some 260 participants...

Laurent Schumacher: Yes, out of the 600 or 700 people concerned, that is significant. We had strong driving forces who took a resolutely positive approach to the tool, based on the premise that it will be part of future professionals' practices and that we should explore to what extent it can be used for learning and career purposes. The various speakers presented the tool, but also its limitations and scenarios for putting it into practice. The aim was to pool and share good practice.

What could ChatGPT bring to the academic world?

L.S.: Typically, when we do research, ChatGPT makes it easier to produce an initial state of the art. The first limitation is that it can only return what it already knows, in other words the knowledge available when its data was collected, in this case late 2021 or early 2022. Nor can one take what ChatGPT says at face value: it requires a critical eye on the part of the student. The fact that it presents itself as a conversational tool also colours how we perceive its answers: you have the impression of actually having someone in front of you, whereas its answer is nothing more than a path between words. The path could have been different, and the resulting text could have said the exact opposite.

Do you have any fears about the risk of plagiarism and fraud?

L.S.: It would be an exaggeration to say that we have no fears. But our relationship to the availability of knowledge has changed. Of course, we cannot imagine training doctors who know nothing about the human body, but we can imagine a scenario in which doctors combine their own knowledge with what they can glean from digital resources: it is then more a question of augmented reality, of augmentation rather than substitution.
